Example overview

[Example screenshots]

[Core code]

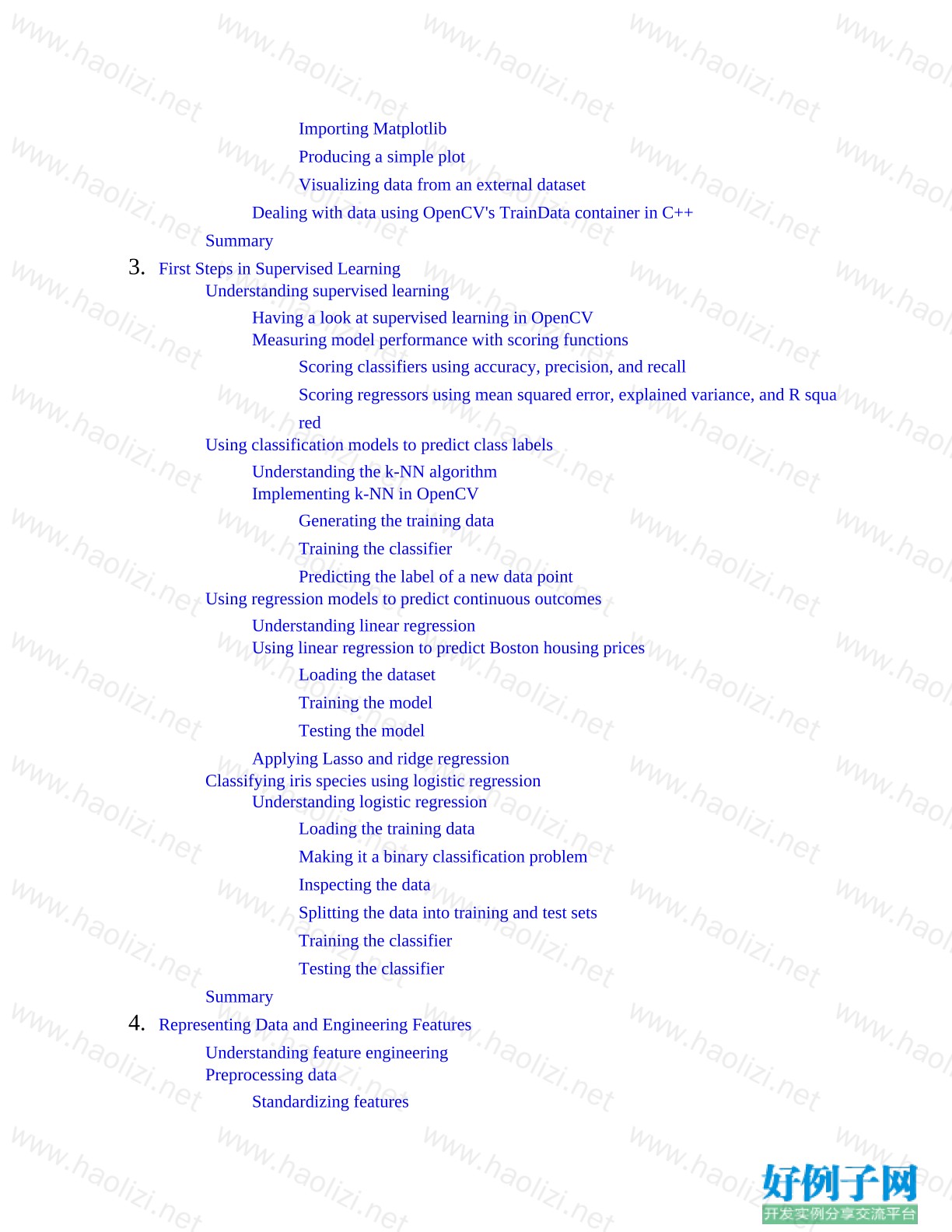

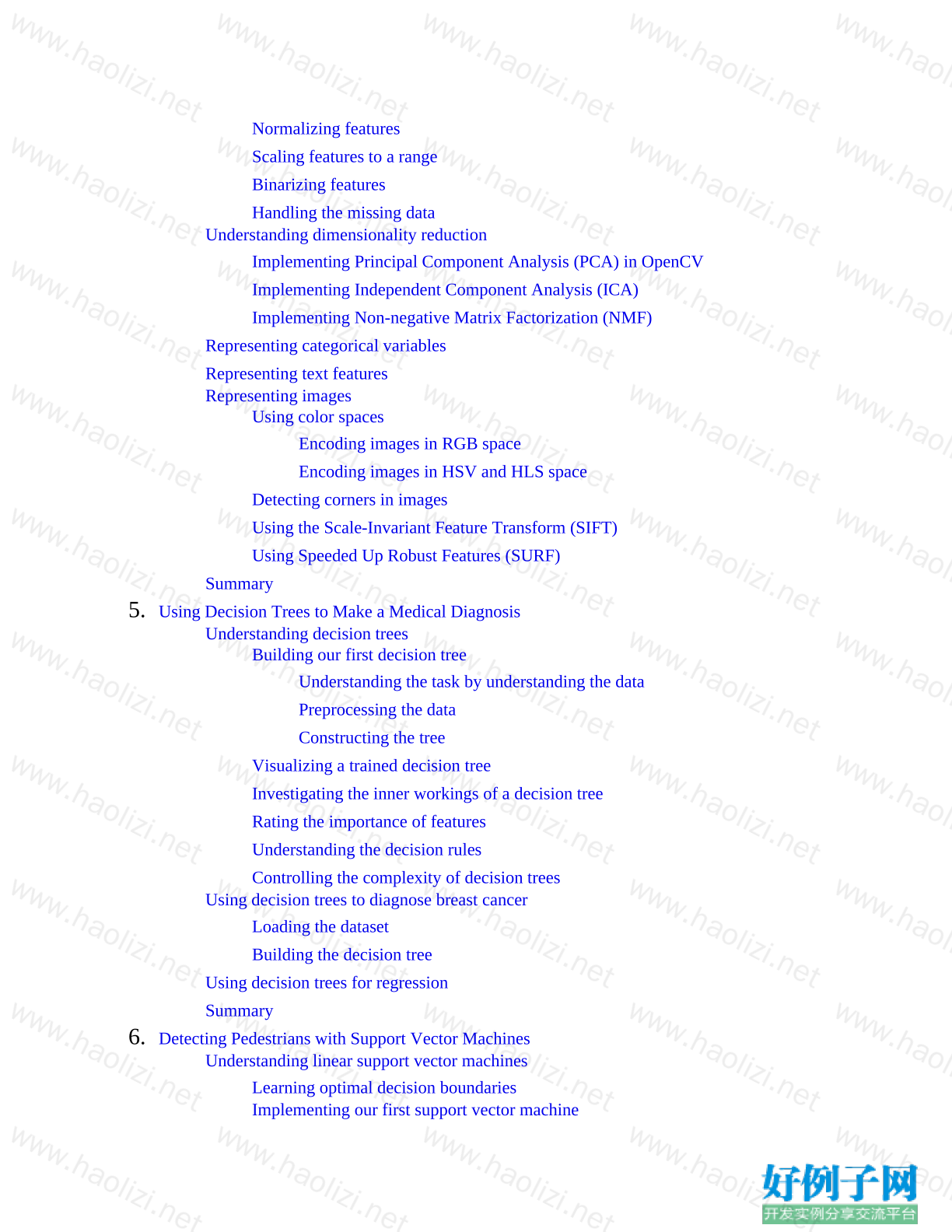

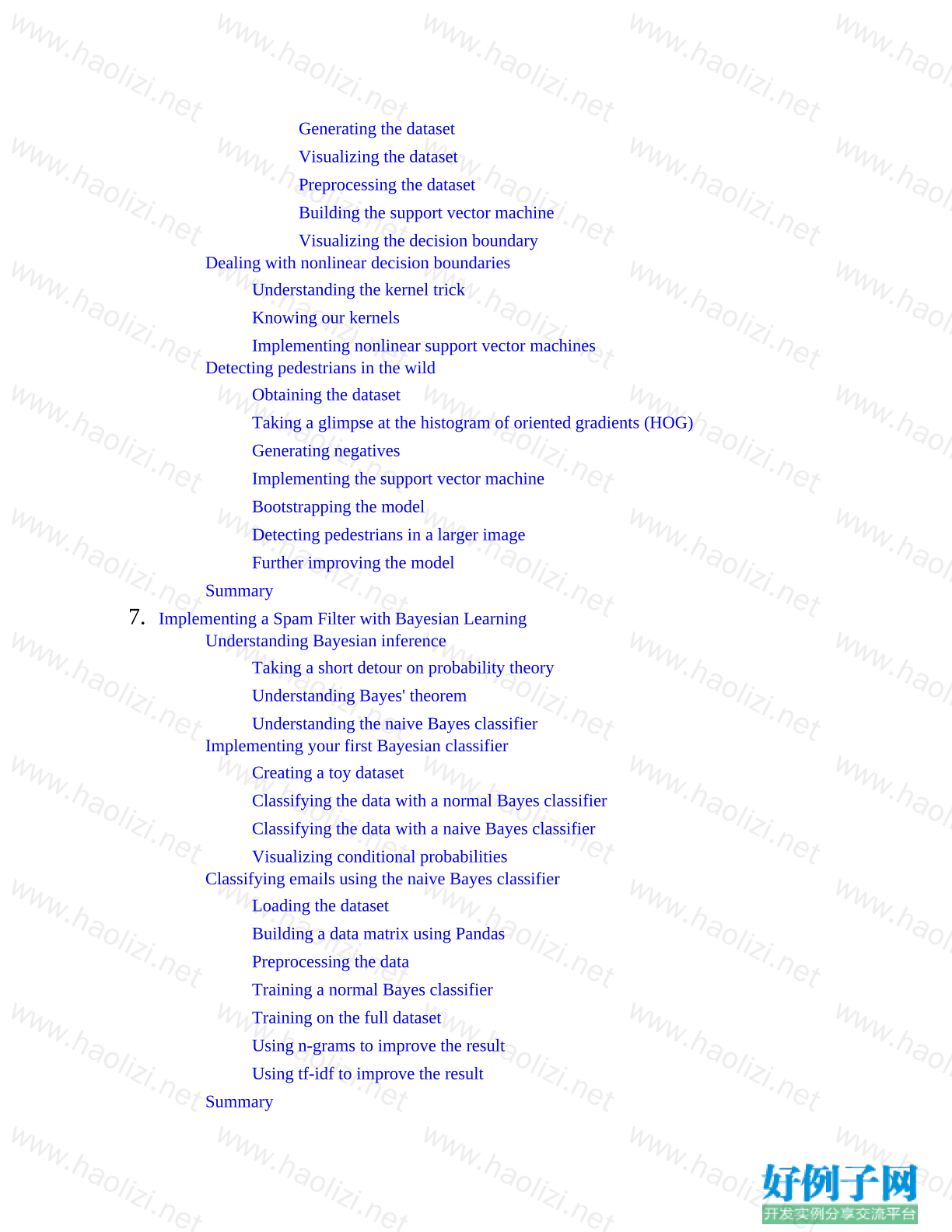

Table of Contents

Preface

What this book covers

What you need for this book

Who this book is for

Conventions

Reader feedback

Customer support

Downloading the example code

Errata

Piracy

Questions

1. A Taste of Machine Learning

Getting started with machine learning

Problems that machine learning can solve

Getting started with Python

Getting started with OpenCV

Installation

Getting the latest code for this book

Getting to grips with Python's Anaconda distribution

Installing OpenCV in a conda environment

Verifying the installation

Getting a glimpse of OpenCV's ML module

Summary

2. Working with Data in OpenCV and Python

Understanding the machine learning workflow

Dealing with data using OpenCV and Python

Starting a new IPython or Jupyter session

Dealing with data using Python's NumPy package

Importing NumPy

Understanding NumPy arrays

Accessing single array elements by indexing

Creating multidimensional arrays

Loading external datasets in Python

Visualizing the data using Matplotlib

Importing Matplotlib

Producing a simple plot

Visualizing data from an external dataset

Dealing with data using OpenCV's TrainData container in C++

Summary

3. First Steps in Supervised Learning

Understanding supervised learning

Having a look at supervised learning in OpenCV

Measuring model performance with scoring functions

Scoring classifiers using accuracy, precision, and recall

Scoring regressors using mean squared error, explained variance, and R squared

Using classification models to predict class labels

Understanding the k-NN algorithm

Implementing k-NN in OpenCV

Generating the training data

Training the classifier

Predicting the label of a new data point

Using regression models to predict continuous outcomes

Understanding linear regression

Using linear regression to predict Boston housing prices

Loading the dataset

Training the model

Testing the model

Applying Lasso and ridge regression

Classifying iris species using logistic regression

Understanding logistic regression

Loading the training data

Making it a binary classification problem

Inspecting the data

Splitting the data into training and test sets

Training the classifier

Testing the classifier

Summary

4. Representing Data and Engineering Features

Understanding feature engineering

Preprocessing data

Standardizing features

Normalizing features

Scaling features to a range

Binarizing features

Handling the missing data

Understanding dimensionality reduction

Implementing Principal Component Analysis (PCA) in OpenCV

Implementing Independent Component Analysis (ICA)

Implementing Non-negative Matrix Factorization (NMF)

Representing categorical variables

Representing text features

Representing images

Using color spaces

Encoding images in RGB space

Encoding images in HSV and HLS space

Detecting corners in images

Using the Scale-Invariant Feature Transform (SIFT)

Using Speeded Up Robust Features (SURF)

Summary

5. Using Decision Trees to Make a Medical Diagnosis

Understanding decision trees

Building our first decision tree

Understanding the task by understanding the data

Preprocessing the data

Constructing the tree

Visualizing a trained decision tree

Investigating the inner workings of a decision tree

Rating the importance of features

Understanding the decision rules

Controlling the complexity of decision trees

Using decision trees to diagnose breast cancer

Loading the dataset

Building the decision tree

Using decision trees for regression

Summary

6. Detecting Pedestrians with Support Vector Machines

Understanding linear support vector machines

Learning optimal decision boundaries

Implementing our first support vector machine

Generating the dataset

Visualizing the dataset

Preprocessing the dataset

Building the support vector machine

Visualizing the decision boundary

Dealing with nonlinear decision boundaries

Understanding the kernel trick

Knowing our kernels

Implementing nonlinear support vector machines

Detecting pedestrians in the wild

Obtaining the dataset

Taking a glimpse at the histogram of oriented gradients (HOG)

Generating negatives

Implementing the support vector machine

Bootstrapping the model

Detecting pedestrians in a larger image

Further improving the model

Summary

7. Implementing a Spam Filter with Bayesian Learning

Understanding Bayesian inference

Taking a short detour on probability theory

Understanding Bayes' theorem

Understanding the naive Bayes classifier

Implementing your first Bayesian classifier

Creating a toy dataset

Classifying the data with a normal Bayes classifier

Classifying the data with a naive Bayes classifier

Visualizing conditional probabilities

Classifying emails using the naive Bayes classifier

Loading the dataset

Building a data matrix using Pandas

Preprocessing the data

Training a normal Bayes classifier

Training on the full dataset

Using n-grams to improve the result

Using tf-idf to improve the result

Summary

8. Discovering Hidden Structures with Unsupervised Learning

Understanding unsupervised learning

Understanding k-means clustering

Implementing our first k-means example

Understanding expectation-maximization

Implementing our own expectation-maximization solution

Knowing the limitations of expectation-maximization

First caveat: No guarantee of finding the global optimum

Second caveat: We must select the number of clusters beforehand

Third caveat: Cluster boundaries are linear

Fourth caveat: k-means is slow for a large number of samples

Compressing color spaces using k-means

Visualizing the true-color palette

Reducing the color palette using k-means

Classifying handwritten digits using k-means

Loading the dataset

Running k-means

Organizing clusters as a hierarchical tree

Understanding hierarchical clustering

Implementing agglomerative hierarchical clustering

Summary

9. Using Deep Learning to Classify Handwritten Digits

Understanding the McCulloch-Pitts neuron

Understanding the perceptron

Implementing your first perceptron

Generating a toy dataset

Fitting the perceptron to data

Evaluating the perceptron classifier

Applying the perceptron to data that is not linearly separable

Understanding multilayer perceptrons

Understanding gradient descent

Training multi-layer perceptrons with backpropagation

Implementing a multilayer perceptron in OpenCV

Preprocessing the data

Creating an MLP classifier in OpenCV

Customizing the MLP classifier

Training and testing the MLP classifier

Getting acquainted with deep learning

Getting acquainted with Keras

Classifying handwritten digits

Loading the MNIST dataset

Preprocessing the MNIST dataset

Training an MLP using OpenCV

Training a deep neural net using Keras

Preprocessing the MNIST dataset

Creating a convolutional neural network

Fitting the model

Summary

10. Combining Different Algorithms into an Ensemble

Understanding ensemble methods

Understanding averaging ensembles

Implementing a bagging classifier

Implementing a bagging regressor

Understanding boosting ensembles

Implementing a boosting classifier

Implementing a boosting regressor

Understanding stacking ensembles

Combining decision trees into a random forest

Understanding the shortcomings of decision trees

Implementing our first random forest

Implementing a random forest with scikit-learn

Implementing extremely randomized trees

Using random forests for face recognition

Loading the dataset

Preprocessing the dataset

Training and testing the random forest

Implementing AdaBoost

Implementing AdaBoost in OpenCV

Implementing AdaBoost in scikit-learn

Combining different models into a voting classifier

Understanding different voting schemes

Implementing a voting classifier

Summary

11. Selecting the Right Model with Hyperparameter Tuning

Evaluating a model

Evaluating a model the wrong way

Evaluating a model in the right way

Selecting the best model

Understanding cross-validation

Manually implementing cross-validation in OpenCV

Using scikit-learn for k-fold cross-validation

Implementing leave-one-out cross-validation

Estimating robustness using bootstrapping

Manually implementing bootstrapping in OpenCV

Assessing the significance of our results

Implementing Student's t-test

Implementing McNemar's test

Tuning hyperparameters with grid search

Implementing a simple grid search

Understanding the value of a validation set

Combining grid search with cross-validation

Combining grid search with nested cross-validation

Scoring models using different evaluation metrics

Choosing the right classification metric

Choosing the right regression metric

Chaining algorithms together to form a pipeline

Implementing pipelines in scikit-learn

Using pipelines in grid searches

Summary

12. Wrapping Up

Approaching a machine learning problem

Building your own estimator

Writing your own OpenCV-based classifier in C++

Writing your own scikit-learn-based classifier in Python

Where to go from here?

Summary
