Resource description

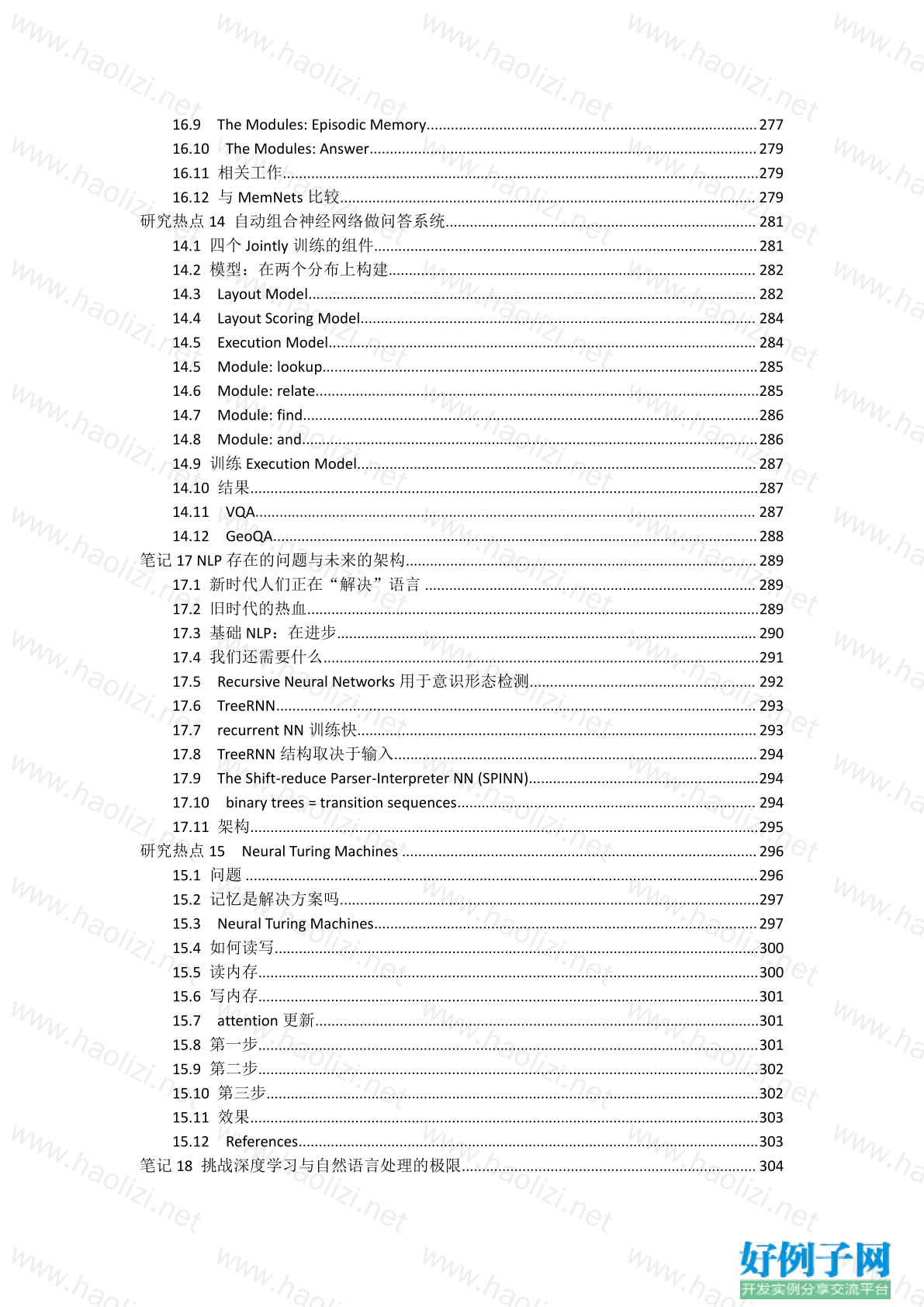

[Overview] Stanford CS224n: Natural Language Processing with Deep Learning, notes by hankcs

[Screenshot]

[Core code]

Contents

Note 1 Introduction to Natural Language Processing and Deep Learning

1.1 Old vs. New CS224

1.2 CS224 Resources

1.3 What Is Natural Language Processing

1.4 Applications of Natural Language Processing

1.5 What Is Special About Human Language

1.6 What Is Deep Learning

1.7 A History of "Deep Learning"

1.8 Why Study Deep Learning

1.9 Deep Learning in Speech Recognition

1.20 Deep Learning in Computer Vision

1.21 About the Course

1.22 Why NLP Is Hard

1.23 Deep NLP = Deep Learning NLP

1.24 Word Vectors

1.25 NLP Levels of Representation: Morphology

1.26 NLP Tools: Parsing

1.27 Semantic-Level Representations in NLP

1.28 Sentiment Analysis

1.29 QA

1.30 Customer Service Systems

1.31 Machine Translation

1.32 Conclusion: Representations at Every Level Are Vectors

Research Highlight 1 A Simple but Tough-to-Beat Baseline for Sentence Embeddings

1.1 Motivation for Sentence Embeddings

1.2 Existing Methods

1.3 The New Method

1.4 Probabilistic Interpretation

1.5 Results

Note 2 Word Vector Representations: word2vec

2.1 How to Represent the Meaning of a Word

2.2 How Computers Handle Word Meaning

2.3 Problems with Discrete Representations

2.4 From Symbolic Representations to Distributed Representations

2.5 Distributional Similarity Based Representations

2.6 Defining Word Meaning with Vectors

2.7 The Basic Idea of Learning Neural Network Word Embeddings

2.8 Learning Low-Dimensional Word Vectors Directly

2.9 The Main Idea of word2vec

2.10 Skip-gram Prediction

2.11 word2vec Details

2.12 Objective Function Details

2.13 Word2Vec Details

2.14 Dot Product

2.15 Softmax Function: The Standard Mapping from Real Numbers to a Probability Distribution

2.16 Skip-gram

2.17 Training the Model: Computing Gradients of the Parameter Vectors

2.18 Loss/Objective Function

2.19 Gradient Descent, SGD

Research Highlight 2 Linear Algebraic Structure of Word Senses and Word Sense Disambiguation

2.1 Recovery

2.2 Recovery Results

2.3 Quantitative Evaluation

2.4 Summary

Note 3 Advanced Word Vector Representations

3.1 Review: The Main Idea of word2vec

3.2 SGD and Word Vectors

3.3 Approximation: Negative Sampling

3.4 Negative Sampling and Skip-gram

3.5 Other Methods

3.6 Window-Based Co-occurrence Matrix

3.7 Problems with Naive Co-occurrence Vectors

3.8 Solution: Low-Dimensional Vectors

3.9 Improvements

3.10 Results

3.11 Problems with SVD

3.12 Count-Based vs. Direct Prediction

3.13 Combining the Best of Both: GloVe

3.14 Evaluation Methods

3.15 Intrinsic Word Vector Evaluation

3.16 Comparison of Results

3.17 Hyperparameter Tuning

3.18 Another Dataset

3.19 Extrinsic Word Vector Evaluation

Note 4 Word Window Classification and Neural Networks

4.1 Classification

4.2 Softmax in Detail

4.3 Softmax and Cross-Entropy Error

4.4 Optimization

4.5 Re-training Word Vectors Loses Generalization

4.6 Window Classification

4.6 The Simplest Classifier: Softmax

4.7 Softmax (Equivalent to Logistic Regression) Is Limited

4.8 Using Neural Networks

4.9 From Logistic Regression to Neural Networks

4.10 Why Nonlinearity Is Needed

4.11 Feed-Forward Networks

4.12 Max-Margin Objective Function

4.13 Training with Backpropagation

Research Highlight 3 Bag of Tricks for Efficient Text Classification

3.1 Facebook's fastText

3.2 Bag-of-Words Model

3.3 A Simple Linear Model

3.4 Training

3.5 Hierarchical Softmax

3.6 Accuracy and Speed

3.7 Summary

Note 5 Backpropagation and Project Advice

5.1 A General Formula for Any Layer

5.2 A Circuit Interpretation of Backpropagation

5.3 A Third Interpretation: Flow Graphs

5.4 A Fourth Interpretation: Error Signals in Real Neural Networks

5.5 Class Projects

Research Highlight 4 Lessons from Word Embeddings for Traditional Methods

4.1 Word Representation Methods

4.2 Hyperparameters in Skip-Gram

4.3 Implications for PMI

4.4 Overview of Tunable Hyperparameters

4.5 Tuning Results

4.6 Conclusion

Note 6 Syntactic Parsing

6.1 Two Views of Linguistic Structure

6.2 Ambiguity

6.3 Attachment Ambiguity

6.4 The Rise of Annotated Data: Universal Dependencies

6.5 Treebanks

6.6 Dependency Grammar and Dependency Structure

6.7 Origins

6.8 Some Details

6.9 Features Available for Parsing

6.10 Dependency Parsing

6.11 Arc-standard Transition

6.12 MaltParser

6.13 Traditional Feature Representations

6.14 Evaluation

6.15 Projectivity

6.16 Why Neural Network Parsers Are Needed

6.17 A Neural Network Dependency Parser

6.18 Why Nonlinearity Is Needed

6.19 Future Work

Assignment 1

1.1 Softmax

1.1.1 Softmax Invariance to Constant Offsets

1.1.2 Python Implementation

1.2 Neural Network Basics

1.2.1 Sigmoid Gradient

1.2.2 Gradient of the Cross-Entropy Loss

1.2.3 Deriving Gradients for a Three-Layer Network

1.2.4 Number of Parameters

1.2.5 Implementing the Sigmoid Function

1.2.6 Implementing Gradient Checking

1.2.7 Implementing Forward and Backward Propagation

Research Highlight 5 Visual Dialog

5.1 Related Work

5.2 Automatic Captioning of Images and Videos

5.3 Aligning Images and Semantics

5.4 Image QA

5.5 Contributions

5.6 Technical Details

5.7 Dataset

5.8 Results

Note 7 Introduction to TensorFlow

7.1 A Brief Introduction to Deep Learning Frameworks

7.2 What Is TF

7.3 The Graph Computation Programming Model

7.4 Where Is the Graph

7.5 How to Run It

7.6 Training

7.7 How Gradients Are Computed

7.8 Variable Sharing

7.9 Summary

7.10 Live Demo

Research Highlight 6 Structured Training for Neural Network Transition-Based Parsing

6.1 What Is SyntaxNet

6.2 Contributions

6.3 Tri-Training: Using Unlabeled Data

6.4 Model Improvements

6.5 Structured Perceptron Training and Beam Search

6.6 Conclusion

Note 8 RNNs and Language Models

8.1 Language Models

8.2 Traditional Language Models

8.3 Recurrent Neural Networks

8.4 Loss Function

8.5 Training RNNs Is Hard

8.6 A Vanishing Gradient Example

8.7 Preventing Exploding Gradients

8.8 Mitigating Vanishing Gradients

8.9 Perplexity Results

8.10 Problem: The Softmax Is Too Large

8.11 Final Implementation Tricks

8.12 Applications of Sequence Models

8.13 Bidirectional RNNs

8.14 Deep Bidirectional RNNs

8.15 Evaluation

8.16 Application: An RNN Machine Translation Model

8.17 Recap

Research Highlight 7 Towards Better Language Modeling

7.1 Better Inputs

7.2 Better Regularization and Preprocessing

7.3 Better Models?

Note 9 Machine Translation, Advanced LSTMs, and GRUs

9.1 Machine Translation

9.2 Traditional Statistical Machine Translation Systems

9.3 Step One: Alignment

9.4 After Alignment

9.5 Decoding: Searching for the Best Choice Among a Huge Number of Hypotheses

9.6 Traditional Machine Translation

9.7 Deep Learning to the Rescue

9.8 The Main Improvement: Better Units

9.9 LSTM (Long Short-Term Memory)

9.10 Some Visualizations

9.11 LSTMs Are Hot

9.12 Deep LSTMs for Machine Translation

9.13 Further Improvements: More Gates!

9.14 Recent Improvements to RNNs

9.15 The Problem with Softmax: It Cannot Produce New Words

9.16 Solving the Problem with Pointers

9.17 Summary

Assignment 2

2.1 TensorFlow Softmax

2.1.1 Softmax

2.1.2 Cross-Entropy

2.1.3 Placeholders & Feed Dictionaries

2.1.4 Softmax & CE Loss

2.1.5 Training Optimizer

Research Highlight 8 Google's Multilingual Neural Machine Translation System

8.1 Bilingual NMT

8.2 The Naive Approach

8.3 Google's Multilingual NMT System

8.4 Architecture

8.5 Results

8.6 Zero-Shot Translation

Note 10 NMT and Attention

10.1 Machine Translation

10.2 The Need for Machine Translation

10.3 What Is NMT

10.4 Architecture

10.5 NMT: The Bronze Age

10.6 Modern NMT Models

10.7 RNN Encoder

10.8 Decoder: A Recurrent Language Model

10.9 The Progress of MT

10.10 Four Big Advantages of NMT

10.11 Statistical vs. Neural Machine Translation

10.12 NMT Is Driven Mainly by Industry

10.13 Introducing Attention

10.14 The Attention Mechanism

10.15 Word Alignment

10.16 Jointly Learning to Translate and Align

10.17 Scoring

10.18 More Attention! Coverage

10.19 Doubly Attention

10.20 Extending Attention with Linguistic Ideas from Older Models

10.21 Decoder

10.22 Ancestral Sampling

10.23 Greedy Search

10.24 Beam Search

10.25 Comparison of Results

Research Highlight 9 Lip Reading

9.1 Lip-Reading Translation

9.2 Architecture

9.3 Vision

9.4 Audio

9.5 Attention and Spell

9.6 Curriculum Learning

9.7 Scheduled Sampling

9.8 Dataset

9.9 Results

Note 11: Further topics in GRUs and NMT

11.1 A closer look at the GRU

11.2 Update Gate

11.3 Reset Gate

11.4 GRU registers

11.5 GRU vs. LSTM

11.6 A closer look at the LSTM

11.7 Training tips

11.8 Ensemble

11.9 MT evaluation

11.10 BLEU

11.11 Brevity Penalty

11.12 Multiple Reference Translations

11.13 Tackling the large-vocabulary problem

11.14 Large-vocab NMT

11.15 Training

11.16 Testing

11.17 More tricks

11.18 Byte Pair Encoding

11.19 Other approaches

Note 12: End-to-end models for speech recognition

12.1 Automatic Speech Recognition (ASR)

12.2 Traditional ASR

12.3 Modern ASR

12.4 End-to-end ASR

12.5 Connectionist Temporal Classification

12.6 Some results

12.7 Sequence-to-sequence speech recognition with attention

12.8 Listen, Attend and Spell

12.9 Results

12.10 Limitations of LAS

12.11 Online seq2seq models

12.12 Neural Transducer

12.13 Results

12.14 Convolutions in the encoder

12.15 Target granularity

12.16 Results

12.17 Model shortcomings

12.18 Solutions

12.19 Another shortcoming

12.20 Better Language Model Blending

12.21 Better Sequence Training

12.22 Opportunities

12.23 Multiple audio sources

12.24 "Simultaneous interpretation"

Assignment 3

3.1 Introduction to named entity recognition

3.2 A window into NER

3.2.1 Concepts

3.2.2 Dimensions and complexity

3.2.3 Implementing the baseline model

3.2.4 Analyzing the results

Research Highlight 10: Character-Aware Neural Language Models

10.1 Motivation

10.2 Architecture

10.3 Convolutional layer

10.4 Highway Network

10.5 LSTM

10.6 Quantitative results

10.7 Qualitative results

10.8 Conclusions

10.9 Implementation

CS224n Note 13: Convolutional neural networks

13.1 From RNNs to CNNs

13.2 What is a convolution?

13.3 Single-layer CNN

13.4 Pooling

13.5 Classification

13.6 Illustration

13.7 Dropout

13.8 Experimental results

13.9 CNN variants

13.10 CNN applications: machine translation

13.11 Model comparison

13.12 Quasi-RNN

Research Highlight 11: Deep reinforcement learning for dialogue generation

11.1 Shortcomings of seq2seq

11.2 What makes a good response?

11.3 Reinforcement learning

11.4 Quantitative results

11.5 Qualitative results

11.6 Conclusions

Note 14: Tree RNNs and constituency parsing

14.1 The spectrum of language models

14.2 Semantic interpretation of language: not just word vectors

14.3 Semantic compositionality

14.4 Linguistic competence

14.5 Is language recursive?

14.6 Representing meaning in word vector space models

14.7 How to map phrases into a vector space

14.8 Phrase structure parsing: the goal

14.9 Recursive vs. recurrent neural networks

14.10 From RNNs to CNNs

14.11 Recursive Neural Networks for structured prediction

14.12 The simplest Recursive Neural Network

14.13 Parsing sentences with an RNN

14.14 Max-margin

14.15 Backpropagation through structure

14.16 Shortcomings of the simple RNN

Research Highlight 12: Automatic code summarization with neural networks

12.1 Task and dataset

12.2 Subtasks

12.3 Network architecture

12.4 Results

12.5 Quantitative evaluation

12.6 Qualitative results

Note 15: Coreference resolution

15.1 What is coreference resolution?

15.2 Applications

15.3 Evaluating coreference resolution

15.4 Types of reference

15.5 Not all NPs are referring

15.6 Coreference, anaphors, cataphors

15.7 Coreference vs. anaphora

15.7 A traditional pronoun resolution method: Hobbs' naive algorithm

15.8 Knowledge-based pronoun resolution

15.9 Coreference resolution models

15.10 Supervised Mention-Pair Model

15.11 Features for coreference resolution

15.12 Neural coreference resolution models

Research Highlight 13: Learning the semantics of code

13.1 Representing code

13.2 Encoding and decoding states

13.3 Objective function

13.4 Generating program embeddings with a RecursiveNN

13.5 Summary

13.6 Future work

Note 16: DMN and question answering

16.1 Can all NLP tasks be viewed as QA?

16.2 Without precedent

16.3 Generalists are hard to come by

16.4 Dynamic Memory Networks

16.5 Answering hard questions

16.6 Dynamic Memory Networks

16.7 The Modules: Input

16.8 The Modules: Question

16.9 The Modules: Episodic Memory

16.10 The Modules: Answer

16.11 Related work

16.12 Comparison with MemNets

Research Highlight 14: Learning to compose neural networks for question answering

14.1 Four jointly trained components

14.2 Model: built on two distributions

14.3 Layout Model

14.4 Layout Scoring Model

14.5 Execution Model

14.5 Module: lookup

14.6 Module: relate

14.7 Module: find

14.8 Module: and

14.9 Training the Execution Model

14.10 Results

14.11 VQA

14.12 GeoQA

Note 17: Open problems in NLP and future architectures

17.1 In the new era, people are "solving" language

17.2 The passion of the old days

17.3 Basic NLP: making progress

17.4 What else do we need?

17.5 Recursive Neural Networks for ideology detection

17.6 TreeRNN

17.7 Recurrent NNs train fast

17.8 TreeRNN structure depends on the input

17.9 The Shift-reduce Parser-Interpreter NN (SPINN)

17.10 Binary trees = transition sequences

17.11 Architecture

Research Highlight 15: Neural Turing Machines

15.1 The problem

15.2 Is memory the solution?

15.3 Neural Turing Machines

15.4 How to read and write

15.5 Reading from memory

15.6 Writing to memory

15.7 Attention update

15.8 Step 1

15.9 Step 2

15.10 Step 3

15.11 Results

15.12 References

Note 18: Pushing the limits of deep learning and natural language processing

18.1 Obstacle 1: A general-purpose architecture

18.2 Obstacle 2: Joint multi-task learning

18.2.1 Solution

18.2.2 Model details

18.2.3 Dependency parsing

18.2.4 Semantic relatedness

18.2.5 Training

18.2.6 Results

18.3 Obstacle 3: Predicting previously unseen words
