Resource overview

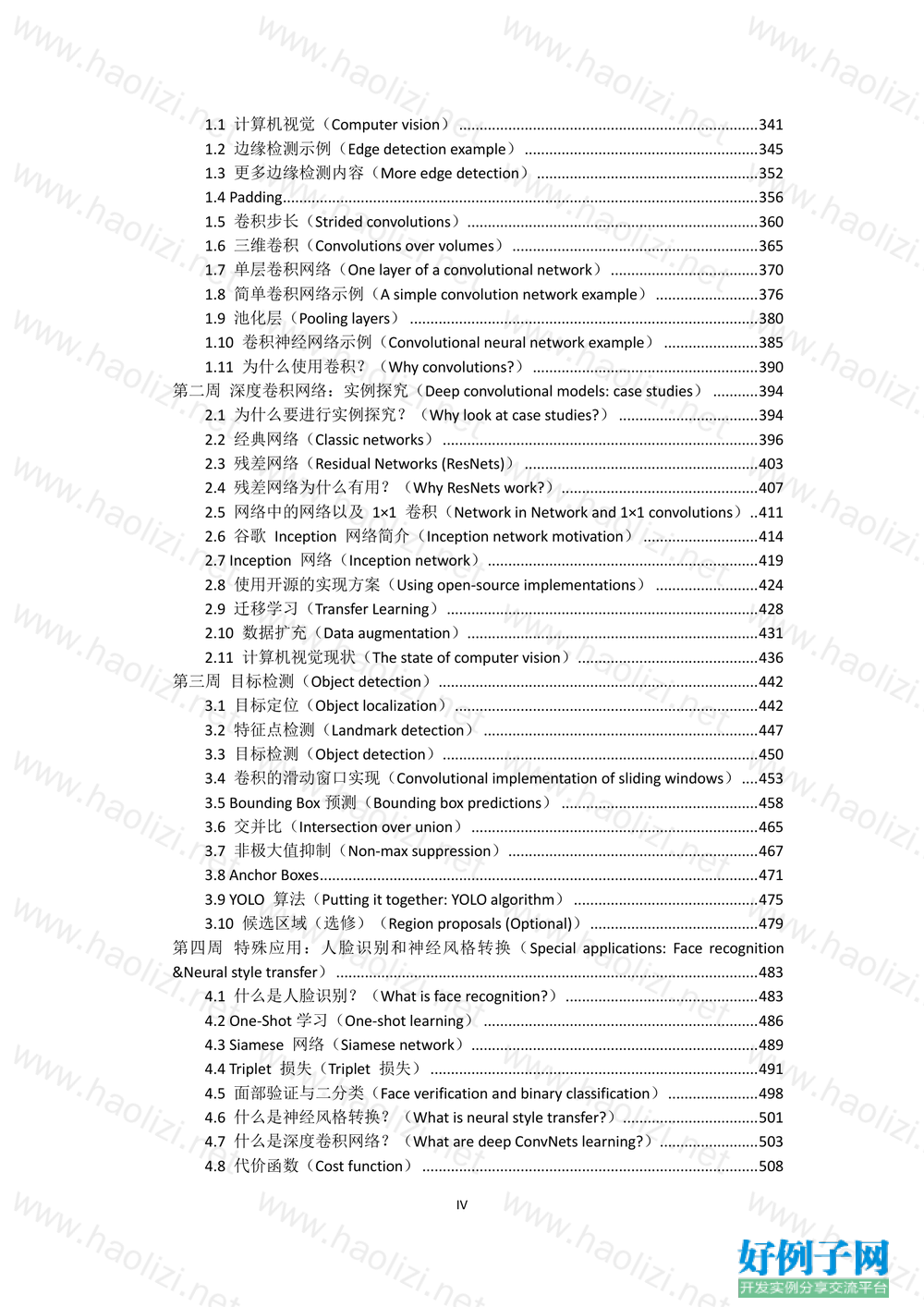

Contents

Course 1: Neural Networks and Deep Learning

Week 1: Introduction to Deep Learning
1.1 Welcome
1.2 What is a Neural Network?
1.3 Supervised Learning with Neural Networks
1.4 Why is Deep Learning taking off?
1.5 About this Course
1.6 Course Resources

Week 2: Basics of Neural Network Programming
2.1 Binary Classification
2.2 Logistic Regression
2.3 Logistic Regression Cost Function
2.4 Gradient Descent
2.5 Derivatives
2.6 More Derivative Examples
2.7 Computation Graph
2.8 Derivatives with a Computation Graph
2.9 Logistic Regression Gradient Descent
2.10 Gradient Descent on m Examples
2.11 Vectorization
2.12 More Examples of Vectorization
2.13 Vectorizing Logistic Regression
2.14 Vectorizing Logistic Regression's Gradient Output
2.15 Broadcasting in Python
2.16 A Note on Python/NumPy Vectors
2.17 Quick Tour of Jupyter/iPython Notebooks
2.18 Explanation of Logistic Regression Cost Function (optional)

Week 3: Shallow Neural Networks
3.1 Neural Network Overview
3.2 Neural Network Representation
3.3 Computing a Neural Network's Output
3.4 Vectorizing across Multiple Examples
3.5 Justification for Vectorized Implementation
3.6 Activation Functions
3.7 Why Do You Need a Nonlinear Activation Function?
3.8 Derivatives of Activation Functions
3.9 Gradient Descent for Neural Networks
3.10 Backpropagation Intuition (optional)
3.11 Random Initialization

Week 4: Deep Neural Networks
4.1 Deep L-layer Neural Network
4.2 Forward and Backward Propagation
4.3 Forward Propagation in a Deep Network
4.4 Getting Your Matrix Dimensions Right
4.5 Why Deep Representations?
4.6 Building Blocks of Deep Neural Networks
4.7 Parameters vs Hyperparameters
4.8 What Does This Have to Do with the Brain?

Course 2: Improving Deep Neural Networks: Hyperparameter Tuning, Regularization and Optimization

Week 1: Practical Aspects of Deep Learning
1.1 Train / Dev / Test Sets
1.2 Bias / Variance
1.3 Basic Recipe for Machine Learning
1.4 Regularization
1.5 Why Regularization Reduces Overfitting?
1.6 Dropout Regularization
1.7 Understanding Dropout
1.8 Other Regularization Methods
1.9 Normalizing Inputs
1.10 Vanishing / Exploding Gradients
1.11 Weight Initialization for Deep Networks
1.12 Numerical Approximation of Gradients
1.13 Gradient Checking
1.14 Gradient Checking Implementation Notes

Week 2: Optimization Algorithms
2.1 Mini-batch Gradient Descent
2.2 Understanding Mini-batch Gradient Descent
2.3 Exponentially Weighted Averages
2.4 Understanding Exponentially Weighted Averages
2.5 Bias Correction in Exponentially Weighted Averages
2.6 Gradient Descent with Momentum
2.7 RMSprop
2.8 Adam Optimization Algorithm
2.9 Learning Rate Decay
2.10 The Problem of Local Optima

Week 3: Hyperparameter Tuning, Batch Normalization and Programming Frameworks
3.1 Tuning Process
3.2 Using an Appropriate Scale to Pick Hyperparameters
3.3 Hyperparameters Tuning in Practice: Pandas vs. Caviar
3.4 Normalizing Activations in a Network
3.5 Fitting Batch Norm into a Neural Network
3.6 Why Does Batch Norm Work?
3.7 Batch Norm at Test Time
3.8 Softmax Regression
3.9 Training a Softmax Classifier
3.10 Deep Learning Frameworks
3.11 TensorFlow

Course 3: Structuring Machine Learning Projects

Week 1: ML Strategy (1)
1.1 Why ML Strategy?
1.2 Orthogonalization
1.3 Single Number Evaluation Metric
1.4 Satisficing and Optimizing Metrics
1.5 Train/Dev/Test Distributions
1.6 Size of Dev and Test Sets
1.7 When to Change Dev/Test Sets and Metrics
1.8 Why Human-level Performance?
1.9 Avoidable Bias
1.10 Understanding Human-level Performance
1.11 Surpassing Human-level Performance
1.12 Improving Your Model Performance

Week 2: ML Strategy (2)
2.1 Carrying Out Error Analysis
2.2 Cleaning Up Incorrectly Labeled Data
2.3 Build Your First System Quickly, Then Iterate
2.4 Training and Testing on Different Distributions
2.5 Bias and Variance with Mismatched Data Distributions
2.6 Addressing Data Mismatch
2.7 Transfer Learning
2.8 Multi-task Learning
2.9 What Is End-to-end Deep Learning?
2.10 Whether to Use End-to-end Learning?

Course 4: Convolutional Neural Networks

Week 1: Foundations of Convolutional Neural Networks
1.1 Computer Vision
1.2 Edge Detection Example
1.3 More Edge Detection
1.4 Padding
1.5 Strided Convolutions
1.6 Convolutions over Volumes
1.7 One Layer of a Convolutional Network
1.8 A Simple Convolution Network Example
1.9 Pooling Layers
1.10 Convolutional Neural Network Example
1.11 Why Convolutions?

Week 2: Deep Convolutional Models: Case Studies
2.1 Why Look at Case Studies?
2.2 Classic Networks
2.3 Residual Networks (ResNets)
2.4 Why ResNets Work?
2.5 Network in Network and 1×1 Convolutions
2.6 Inception Network Motivation
2.7 Inception Network
2.8 Using Open-source Implementations
2.9 Transfer Learning
2.10 Data Augmentation
2.11 The State of Computer Vision

Week 3: Object Detection
3.1 Object Localization
3.2 Landmark Detection
3.3 Object Detection
3.4 Convolutional Implementation of Sliding Windows
3.5 Bounding Box Predictions
3.6 Intersection over Union
3.7 Non-max Suppression
3.8 Anchor Boxes
3.9 Putting It Together: YOLO Algorithm
3.10 Region Proposals (optional)

Week 4: Special Applications: Face Recognition & Neural Style Transfer
4.1 What Is Face Recognition?
4.2 One-shot Learning
4.3 Siamese Network
4.4 Triplet Loss
4.5 Face Verification and Binary Classification
4.6 What Is Neural Style Transfer?
4.7 What Are Deep ConvNets Learning?
4.8 Cost Function
4.9 Content Cost Function
4.10 Style Cost Function
4.11 1D and 3D Generalizations of Models

Course 5: Sequence Models

Week 1: Recurrent Neural Networks
1.1 Why Sequence Models?
1.2 Notation
1.3 Recurrent Neural Network Model
1.4 Backpropagation through Time
1.5 Different Types of RNNs
1.6 Language Model and Sequence Generation
1.7 Sampling Novel Sequences
1.8 Vanishing Gradients with RNNs
1.9 Gated Recurrent Unit (GRU)
1.10 Long Short-Term Memory (LSTM) Unit
1.11 Bidirectional RNN
1.12 Deep RNNs

Week 2: Natural Language Processing and Word Embeddings
2.1 Word Representation
2.2 Using Word Embeddings
2.3 Properties of Word Embeddings
2.4 Embedding Matrix
2.5 Learning Word Embeddings
2.6 Word2Vec
2.7 Negative Sampling
2.8 GloVe Word Vectors
2.9 Sentiment Classification
2.10 Debiasing Word Embeddings

Week 3: Sequence Models & Attention Mechanism
3.1 Various Sequence to Sequence Architectures
3.2 Picking the Most Likely Sentence
3.3 Beam Search
3.4 Refinements to Beam Search
3.5 Error Analysis in Beam Search
3.6 Bleu Score (optional)
3.7 Attention Model Intuition
3.8 Attention Model
3.9 Speech Recognition
3.10 Trigger Word Detection
3.11 Conclusion and Thank You

Appendix

The Power of Role Models: Transcripts of Andrew Ng's Interviews with Leading AI Researchers
Andrew Ng interviews Geoffrey Hinton
Andrew Ng interviews Ian Goodfellow
Andrew Ng interviews Ruslan Salakhutdinov
Andrew Ng interviews Yoshua Bengio
Andrew Ng interviews Yuanqing Lin
Andrew Ng interviews Pieter Abbeel
Andrew Ng interviews Andrej Karpathy

Deep Learning Notation Guide (translated from the original course)

Mathematical Foundations of Machine Learning
Advanced Mathematics
Linear Algebra
Probability Theory and Mathematical Statistics



