Example Overview

[Summary] Deep Learning By Example: A hands-on guide to implementing advanced machine learning algorithms and neural networks

[Core Code]

Table of Contents

Preface 1

Chapter 1: Data Science - A Bird's-Eye View 9

Understanding data science by an example 10

Design procedure of data science algorithms 17

Data pre-processing 18

Data cleaning 18

Data pre-processing 19

Feature selection 19

Model selection 19

Learning process 20

Evaluating your model 20

Getting to learn 21

Challenges of learning 21

Feature extraction – feature engineering 21

Noise 21

Overfitting 21

Selection of a machine learning algorithm 22

Prior knowledge 22

Missing values 22

Implementing the fish recognition/detection model 23

Knowledge base/dataset 24

Data analysis pre-processing 25

Model building 28

Model training and testing 33

Fish recognition – all together 33

Different learning types 33

Supervised learning 34

Unsupervised learning 35

Semi-supervised learning 36

Reinforcement learning 37

Data size and industry needs 37

Summary 38

Chapter 2: Data Modeling in Action - The Titanic Example 39

Linear models for regression 39

Motivation 40

Advertising – a financial example 40

Dependencies 41

Importing data with pandas 41

Understanding the advertising data 43

Data analysis and visualization 43

Simple regression model 45

Learning model coefficients 45

Interpreting model coefficients 47

Using the model for prediction 47

Linear models for classification 49

Classification and logistic regression 51

Titanic example – model building and training 53

Data handling and visualization 54

Data analysis – supervised machine learning 60

Different types of errors 63

Apparent (training set) error 64

Generalization/true error 65

Summary 65

Chapter 3: Feature Engineering and Model Complexity – The Titanic Example Revisited 66

Feature engineering 67

Types of feature engineering 67

Feature selection 68

Dimensionality reduction 68

Feature construction 68

Titanic example revisited 69

Missing values 70

Removing any sample with missing values in it 70

Missing value imputation 70

Assigning an average value 70

Using a regression or another simple model to predict the values of missing variables 71

Feature transformations 72

Dummy features 72

Factorizing 73

Scaling 73

Binning 74

Derived features 74

Name 75

Cabin 76

Ticket 77

Interaction features 78

The curse of dimensionality 80

Avoiding the curse of dimensionality 82

Titanic example revisited – all together 83

Bias-variance decomposition 95

Learning visibility 98

Breaking the rule of thumb 98

Summary 98

Chapter 4: Get Up and Running with TensorFlow 100

TensorFlow installation 100

TensorFlow GPU installation for Ubuntu 16.04 101

Installing NVIDIA drivers and CUDA 8 101

Installing TensorFlow 104

TensorFlow CPU installation for Ubuntu 16.04 105

TensorFlow CPU installation for macOS 106

TensorFlow GPU/CPU installation for Windows 108

The TensorFlow environment 108

Computational graphs 110

TensorFlow data types, variables, and placeholders 111

Variables 112

Placeholders 113

Mathematical operations 114

Getting output from TensorFlow 115

TensorBoard – visualizing learning 118

Summary 123

Chapter 5: TensorFlow in Action - Some Basic Examples 124

Capacity of a single neuron 125

Biological motivation and connections 125

Activation functions 127

Sigmoid 128

Tanh 128

ReLU 128

Feed-forward neural network 129

The need for multilayer networks 130

Training our MLP – the backpropagation algorithm 132

Step 1 – forward propagation 133

Step 2 – backpropagation and weight update 134

TensorFlow terminology – recap 135

Defining multidimensional arrays using TensorFlow 137

Why tensors? 139

Variables 140

Placeholders 141

Operations 143

Linear regression model – building and training 144

Linear regression with TensorFlow 145

Logistic regression model – building and training 149

Utilizing logistic regression in TensorFlow 149

Why use placeholders? 150

Set model weights and bias 151

Logistic regression model 151

Training 152

Cost function 152

Summary 157

Chapter 6: Deep Feed-forward Neural Networks - Implementing Digit Classification 158

Hidden units and architecture design 158

MNIST dataset analysis 160

The MNIST data 161

Digit classification – model building and training 162

Data analysis 166

Building the model 169

Model training 174

Summary 179

Chapter 7: Introduction to Convolutional Neural Networks 180

The convolution operation 180

Motivation 185

Applications of CNNs 186

Different layers of CNNs 186

Input layer 187

Convolution step 187

Introducing non-linearity 188

The pooling step 190

Fully connected layer 191

Logits layer 192

CNN basic example – MNIST digit classification 194

Building the model 196

Cost function 201

Performance measures 202

Model training 202

Summary 209

Chapter 8: Object Detection – CIFAR-10 Example 210

Object detection 210

CIFAR-10 – modeling, building, and training 211

Used packages 212

Loading the CIFAR-10 dataset 212

Data analysis and preprocessing 213

Building the network 218

Model training 222

Testing the model 227

Summary 230

Chapter 9: Object Detection – Transfer Learning with CNNs 231

Transfer learning 231

The intuition behind TL 232

Differences between traditional machine learning and TL 233

CIFAR-10 object detection – revisited 234

Solution outline 235

Loading and exploring CIFAR-10 236

Inception model transfer values 240

Analysis of transfer values 245

Model building and training 248

Summary 256

Chapter 10: Recurrent-Type Neural Networks - Language Modeling 257

The intuition behind RNNs 257

Recurrent neural network architectures 258

Examples of RNNs 260

Character-level language models 260

Language model using Shakespeare data 262

The vanishing gradient problem 263

The problem of long-term dependencies 263

LSTM networks 265

Why does LSTM work? 266

Implementation of the language model 267

Mini-batch generation for training 269

Building the model 272

Stacked LSTMs 272

Model architecture 274

Inputs 274

Building an LSTM cell 275

RNN output 276

Training loss 277

Optimizer 277

Building the network 278

Model hyperparameters 279

Training the model 279

Saving checkpoints 281

Generating text 281

Summary 284

Chapter 11: Representation Learning - Implementing Word Embeddings 285

Introduction to representation learning 286

Word2Vec 287

Building the Word2Vec model 288

A practical example of the skip-gram architecture 292

Skip-gram Word2Vec implementation 293

Data analysis and pre-processing 296

Building the model 302

Training 304

Summary 309

Chapter 12: Neural Sentiment Analysis 310

General sentiment analysis architecture 310

RNNs – sentiment analysis context 313

Exploding and vanishing gradients - recap 316

Sentiment analysis – model implementation 317

Keras 317

Data analysis and preprocessing 318

Building the model 329

Model training and results analysis 331

Summary 334

Chapter 13: Autoencoders – Feature Extraction and Denoising 335

Introduction to autoencoders 336

Examples of autoencoders 337

Autoencoder architectures 338

Compressing the MNIST dataset 339

The MNIST dataset 339

Building the model 341

Model training 342

Convolutional autoencoder 345

Dataset 345

Building the model 346

Model training 348

Denoising autoencoders 351

Building the model 352

Model training 354

Applications of autoencoders 357

Image colorization 357

More applications 358

Summary 359

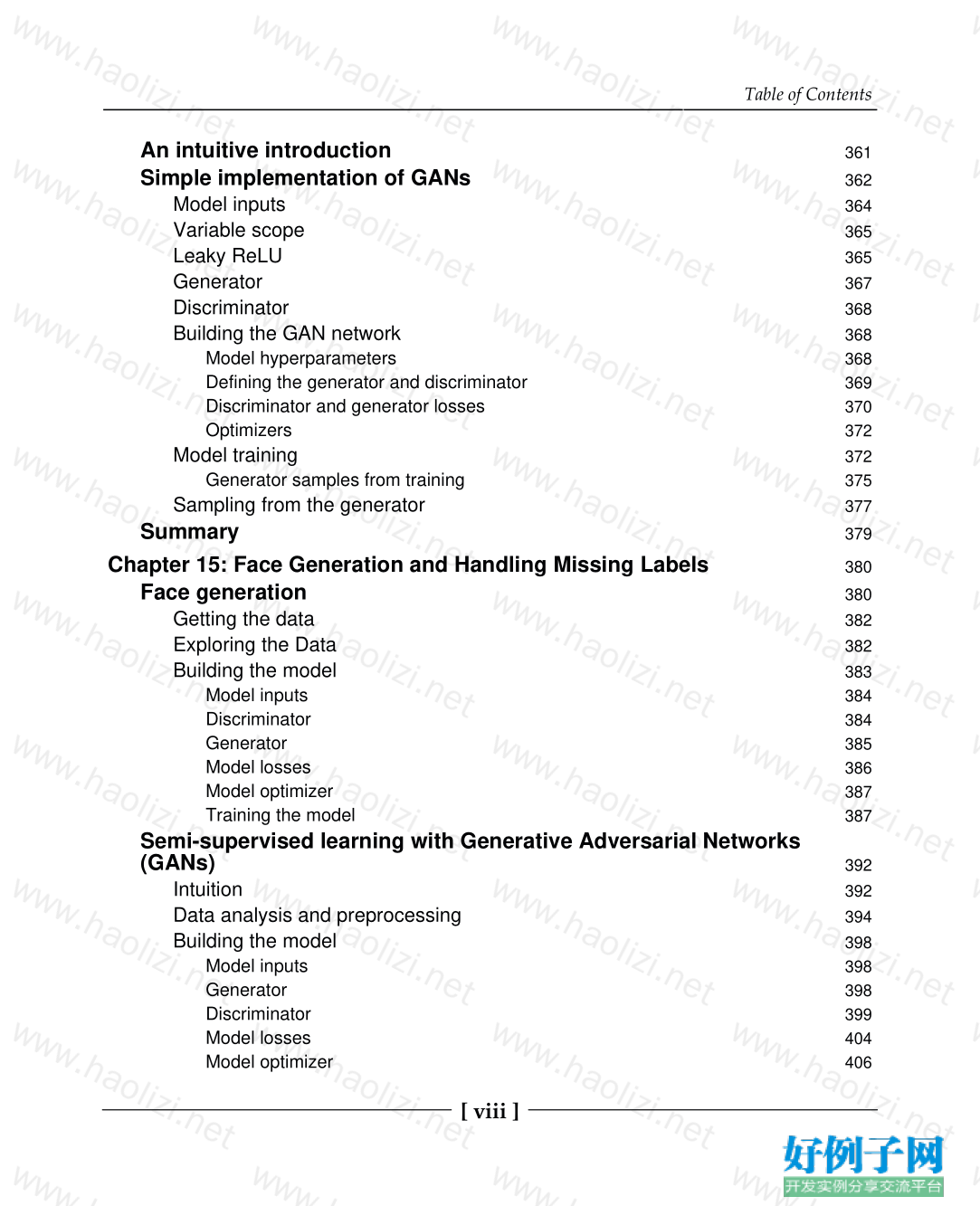

Chapter 14: Generative Adversarial Networks 360

An intuitive introduction 361

Simple implementation of GANs 362

Model inputs 364

Variable scope 365

Leaky ReLU 365

Generator 367

Discriminator 368

Building the GAN network 368

Model hyperparameters 368

Defining the generator and discriminator 369

Discriminator and generator losses 370

Optimizers 372

Model training 372

Generator samples from training 375

Sampling from the generator 377

Summary 379

Chapter 15: Face Generation and Handling Missing Labels 380

Face generation 380

Getting the data 382

Exploring the data 382

Building the model 383

Model inputs 384

Discriminator 384

Generator 385

Model losses 386

Model optimizer 387

Training the model 387

Semi-supervised learning with Generative Adversarial Networks (GANs) 392

Intuition 392

Data analysis and preprocessing 394

Building the model 398

Model inputs 398

Generator 398

Discriminator 399

Model losses 404

Model optimizer 406

Model training 407

Summary 411

Appendix: Implementing Fish Recognition 412

Code for fish recognition 412

Other Books You May Enjoy 418

Index 421
